Session Summary

Context and Intent

This session continued the development of a closed, collaborative compositional system between Dr. Michael Rhoades and ChatGPT using Csound. The focus was on clarifying core terminology, reviewing diagrams and conceptual frameworks, formalizing evaluation procedures, and preparing for the next phase of work (01.interactions). The long-term goal remains to build a closed AI system that generates Csound scores solely from materials, constraints, and feedback provided within this collaboration.

Core Compositional Concepts

Composition as Composite Waveform. A musical composition is understood as a composite digital waveform, constructed from the bottom up via Csound score events. Csound is the medium through which these waveforms are programmatically created.

Event. An event is defined as one line of the Csound score (one score statement). It is loosely analogous to a note, but much more densely parametric, including start time, duration, frequency-related parameters, amplitude, envelopes, spatial parameters, routing, and other p-fields.

Density:

Density is primarily determined by the amount of overlap among events in time. A low-density passage has events separated by silence, with little or no overlap; a high-density passage has event durations that extend past the start times of subsequent events, producing overlapping activity. Density can be viewed as a ratio between event durations and the spacing of their start times, that is, how much of the time axis is occupied by active sound. Deliberate modulation of density over time (low, medium, and high, and the transitions between them) is considered a fundamental formal element.
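As an illustration only, the ratio described above can be made concrete in two ways: the fraction of the time axis covered by at least one event, and the summed event duration divided by the score length (the average number of simultaneously active events). The function name `density_measures` and both measures are assumptions made for this sketch; the session did not fix a formula for density.

```python
def density_measures(events):
    """Return (occupancy, mean_overlap) for a list of (start, duration) pairs.

    occupancy    = fraction of the score length covered by at least one event
    mean_overlap = summed durations / score length
    Both are illustrative stand-ins, not a definition agreed in the session.
    """
    spans = sorted((start, start + dur) for start, dur in events)
    total = max(end for _, end in spans)   # score length, assuming time starts at 0

    covered = 0.0
    cur_start, cur_end = spans[0]
    for start, end in spans[1:]:
        if start > cur_end:                # silent gap: close the merged span
            covered += cur_end - cur_start
            cur_start, cur_end = start, end
        else:                              # overlap: extend the merged span
            cur_end = max(cur_end, end)
    covered += cur_end - cur_start

    summed = sum(dur for _, dur in events)
    return covered / total, summed / total


sparse = density_measures([(0, 1), (3, 1), (6, 1)])   # events separated by silence
dense = density_measures([(0, 4), (1, 4), (2, 4)])    # durations overlap later onsets
```

In this sketch the sparse passage yields values below 1 for both measures, while the dense passage is fully occupied and has a mean overlap above 1. Tracking either value over successive time windows would give a rough density profile.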

Rhythm:

Rhythm is treated as an emergent property arising from event start times, durations, overlaps, and envelope shapes, rather than from traditional metric patterns or beats.

Frequency Relationships:

The focus is not on notes and melodies, but on events and frequency relationships: ratios and interactions among frequencies (simultaneous and successive), and their distribution across the spectrum over time.

Counterpoint as Parametric Interaction. Counterpoint is understood as the interaction of independently evolving parameter trajectories (frequency, amplitude, spectral brightness/noisiness, spatial position, etc.), rather than traditional melodic line-writing.

Stochastic Exploration and Emergent Quanta:

The compositional process is highly stochastic. Randomness within designed constraints is used to generate material that can genuinely surprise the composer. Dr. Rhoades often describes his role as a “miner for emergent quanta”: designing probabilistic systems to uncover striking events, textures, and interactions. When such emergent structures appear, they are “brought out to play” and further developed.

Diagrams and Conceptual Frameworks

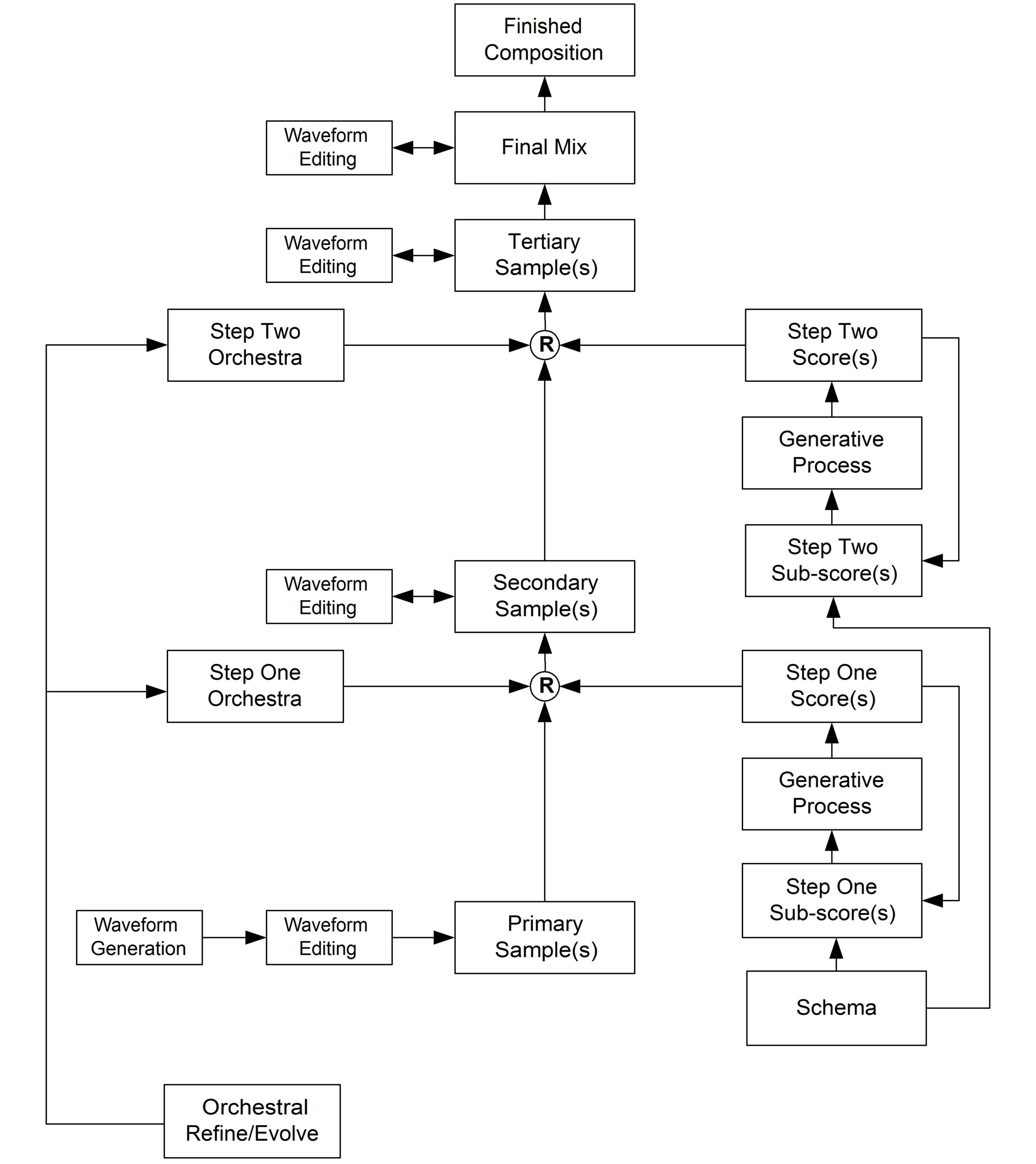

Generative Algorithmic Paradigm:

The diagram below was reviewed. It shows a multi-stage process: waveform generation and editing leading to primary, secondary, and tertiary sample layers; Step One and Step Two orchestras driven by Step One and Step Two scores; and final waveform editing and mixing. Csound rendering stages (marked “R”) transform scores and orchestras into new audio material at each layer. Orchestral refinement/evolution operates as a feedback mechanism: listening and evaluation inform changes to the orchestras and workflows.

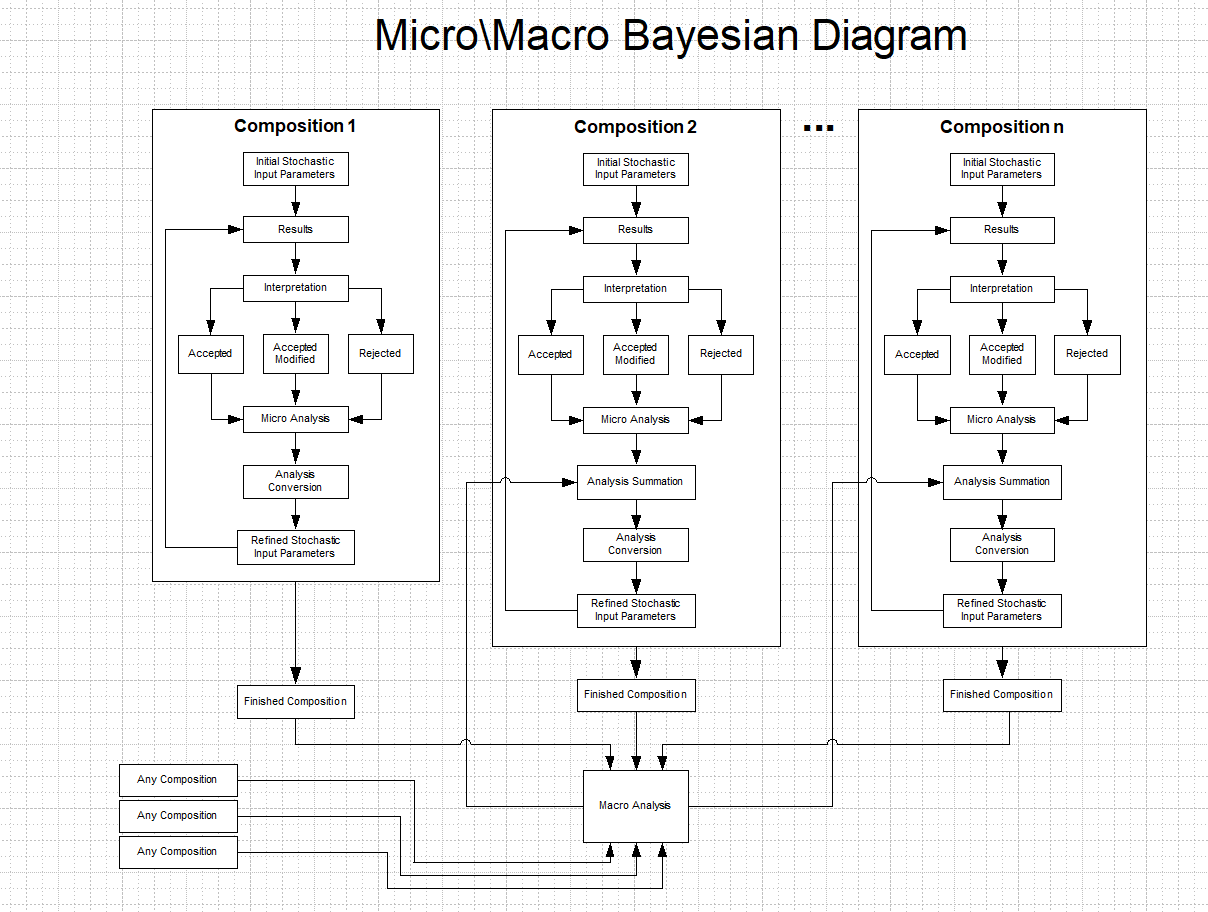

Micro/Macro Bayesian Model:

The Micro/Macro Bayesian diagram below was examined. At the micro level, each composition involves initial stochastic input parameters, generated results, interpretation, categorization of material as accepted, accepted-modified, or rejected, micro analysis, and conversion of that analysis into refined stochastic parameters. At the macro level, finished compositions feed into macro analysis across works, updating higher-level priors for future compositions. This framework closely matches the intended behavior of the ChatGPT–Csound system, with evaluations guiding parameter refinement over successive interactions.

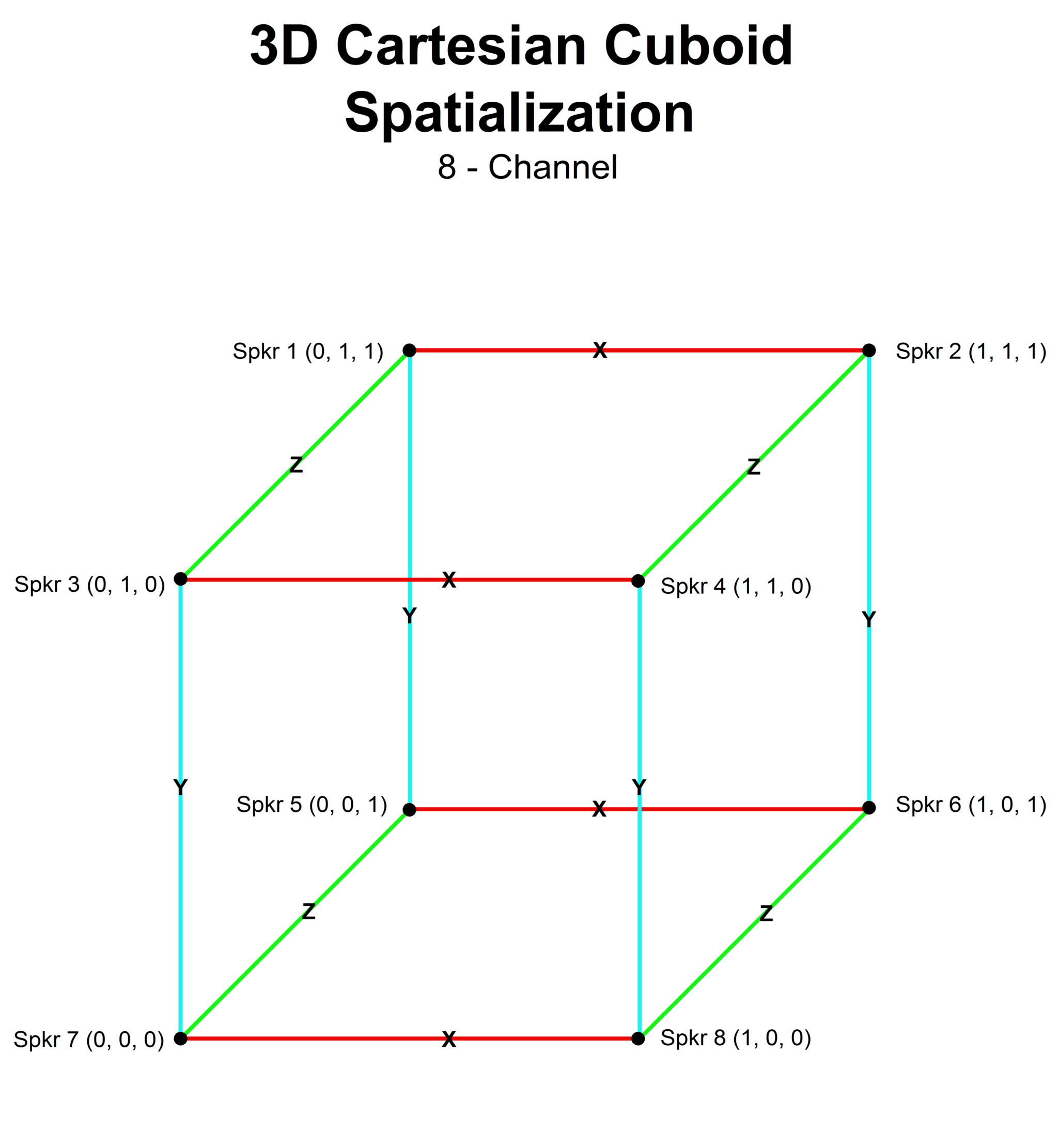

Spatialization:

3D Cartesian Cuboid with Octangulation. The spatialization schema below was reviewed. It uses an 8-channel 3D Cartesian cuboid with loudspeakers at the corners (coordinates in the unit cube). The system implements a vector-based amplitude panning (VBAP-like) approach generalized to 8 loudspeakers (“octangulation”) rather than the usual three, combined with convolution reverb to encode distance. Spatial perception results from the tandem action of amplitude panning across the 8 speakers and distance-related convolution processing. Event positions in (x, y, z) will be mapped to loudspeaker gains and distance algorithms in the Csound orchestra.
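The octangulation algorithm itself is not specified in this summary. Purely as a stand-in, the sketch below maps an (x, y, z) position in the unit cube to eight corner gains using trilinear weighting with constant-power normalization. This is a different, simpler technique chosen for illustration; the name `corner_gains` is hypothetical, and the distance-related convolution stage is not modeled.

```python
from itertools import product
from math import sqrt

# The eight loudspeaker positions: corners of the unit cube.
CORNERS = list(product((0, 1), repeat=3))


def corner_gains(x, y, z):
    """Map a position in the unit cube to one gain per corner loudspeaker.

    Trilinear weights sum to 1; taking square roots makes the squared gains
    sum to 1 (constant power). This is an assumed stand-in, not the
    octangulation method used in the actual orchestra.
    """
    gains = {}
    for cx, cy, cz in CORNERS:
        weight = ((x if cx else 1 - x)
                  * (y if cy else 1 - y)
                  * (z if cz else 1 - z))
        gains[(cx, cy, cz)] = sqrt(weight)
    return gains


centre = corner_gains(0.5, 0.5, 0.5)   # equal gain on all eight speakers
at_corner = corner_gains(1, 0, 0)      # all energy on the speaker at (1, 0, 0)
```

A source at the centre of the cube is distributed equally across all eight loudspeakers, and a source placed exactly on a corner is sent to that loudspeaker alone.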

Evaluation Scheme and Density Judgments for 00.render

A 0–100 evaluation scale was established for rendered score files. A rating of 0 indicates that no material in the render is acceptable as a contribution toward a composition. A rating of 100 indicates that all material is acceptable. When the rating is greater than 0, time ranges of acceptable and unacceptable material may be marked for more detailed analysis. In some cases, only an overall rating will be provided; in others, both overall and sectional feedback will be given.

In this session, the render from the first interaction (00.render) was assigned a rating of 0. The reason was its monotonous density profile: it lacked meaningful alternation between low, medium, and high densities. This was judged to be formally uninteresting, given that the interplay of varying densities is considered a fundamental structural element. Low densities provide relief, punctuation, and moments of release; high densities afford rich washes and complex interactions. The absence of such variation led to a complete rejection of 00.render as a useful contribution.
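One possible way to record such evaluations in a machine-readable form is sketched below. The class name `RenderEvaluation` and its field names are hypothetical; only the 0–100 scale, the optional time ranges, and the 00.render result come from the session.

```python
from dataclasses import dataclass, field


@dataclass
class RenderEvaluation:
    """Hypothetical record for one evaluated render."""
    render: str
    rating: int                                     # 0 = nothing acceptable, 100 = all acceptable
    accepted: list = field(default_factory=list)    # (start_s, end_s) ranges, optional
    rejected: list = field(default_factory=list)    # (start_s, end_s) ranges, optional
    note: str = ""

    def __post_init__(self):
        if not 0 <= self.rating <= 100:
            raise ValueError("rating must be between 0 and 100")
        if self.rating == 0 and self.accepted:
            raise ValueError("a rating of 0 means no material is acceptable")


# The evaluation recorded in this session: overall rating only, no time ranges.
eval_00 = RenderEvaluation("00.render", 0, note="monotonous density profile")
```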

Technical Clarifications from Interaction_00

Sample Naming and IDs:

Base audio files are named using the convention Soundin.xxx. The trailing digits xxx are the identifiers used in the sub-score and score (e.g., as p10 values), and the Csound orchestra maps these IDs to the corresponding Soundin.xxx samples. The specific numeric IDs are local to each interaction; the same number in different interactions does not necessarily refer to the same sound unless explicitly stated. In 00.interactions, a mismatch between original WAV filenames and p10 IDs was corrected by mapping files such as 42.wav → Soundin.151 (p10 = 151), etc.

Instrument Calls (p1):

Instrument events in the score must be written as Csound i statements, with the letter i preceding the instrument number in p1 (e.g., i1), not as a bare integer. A correction was noted for 00.score, where “1” appeared in place of “i1”.

Onset (p2):

The first event in every score must have p2 = 0 to ensure there is no delay at the beginning of the rendered sound file. All other event start times must be greater than or equal to 0, so the earliest onset in the score is always exactly 0.
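The two score rules above (i statements in p1, earliest onset at 0) lend themselves to an automatic check before rendering. The following is a minimal sketch, assuming well-formed lines with numeric p2 values (no `+` or `.` carry symbols); the function name `check_score` is hypothetical.

```python
def check_score(lines):
    """Return a list of problems found among the event lines of a score.

    Assumes numeric p2 values; statements other than i (f, e, ...) are ignored.
    """
    problems = []
    onsets = []
    for number, raw in enumerate(lines, start=1):
        line = raw.split(";", 1)[0].strip()      # drop trailing comments
        if not line:
            continue
        fields = line.split()
        head = fields[0]
        if head[0].isdigit():
            problems.append(f"line {number}: bare instrument number, expected an i statement")
            continue
        if not head.startswith("i"):
            continue
        # Csound accepts both "i1 0 5" and "i 1 0 5"; normalize to p-fields.
        p_fields = fields[1:] if head == "i" else [head[1:]] + fields[1:]
        onsets.append(float(p_fields[1]))        # p2 is the onset
    if onsets and min(onsets) != 0:
        problems.append(f"earliest onset is {min(onsets)}, expected 0")
    return problems


ok = check_score(["i1 0 3.25 ; first event", "i 1 1.5 4"])
bad = check_score(["1 0 3", "i1 2 3"])
```

Here `ok` is an empty list, while `bad` reports both the bare integer in p1 and the non-zero earliest onset.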

Tendency Mask Semantics:

The sub-score syntax for random parameters such as p3 was clarified. For example:

p3 rnd uni

mask (0 3) (0 5)

prec 2

indicates that p3 is drawn from a uniform distribution on the range [3, 5] with two decimal places. More complex masks, such as:

p3 rnd uni

mask (0 3 20 8) (0 5 25 30)

prec 2

define piecewise-constant ranges over score time:

The first mask line specifies the lower bound over time; the second mask line specifies the upper bound. Between breakpoints, bounds remain constant and change abruptly at the specified times; there is no interpolation between mask points. Values are drawn uniformly within the active bounds at each event’s start time.
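The mask semantics described above can be sketched as a step function over score time followed by a uniform draw. The helper names `bound_at` and `draw` are hypothetical, and this is not the actual sub-score generator. One point is an assumption: under the piecewise-constant reading, the example masks give a lower bound of 8 and an upper bound of 5 between t = 20 and t = 25, and the session did not state how crossed bounds are handled, so the sketch simply orders the pair.

```python
import random


def bound_at(breakpoints, t):
    """Step function: value of the last breakpoint at or before time t.

    breakpoints is a flat sequence (time0, value0, time1, value1, ...).
    No interpolation is performed between breakpoints.
    """
    times = breakpoints[0::2]
    values = breakpoints[1::2]
    current = values[0]
    for bp_time, bp_value in zip(times, values):
        if bp_time <= t:
            current = bp_value
    return current


def draw(lower, upper, t, prec=2, rng=random):
    """Draw one value uniformly within the bounds active at event start time t."""
    lo, hi = bound_at(lower, t), bound_at(upper, t)
    lo, hi = min(lo, hi), max(lo, hi)   # assumption: crossed bounds are ordered
    return round(rng.uniform(lo, hi), prec)


lower = (0, 3, 20, 8)     # mask (0 3 20 8)
upper = (0, 5, 25, 30)    # mask (0 5 25 30)
rng = random.Random(1)
p3_early = draw(lower, upper, t=10, rng=rng)   # bounds 3 and 5
p3_late = draw(lower, upper, t=30, rng=rng)    # bounds 8 and 30
```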

Naming Conventions and Organization:

To keep materials organized, interactions are indexed with two-digit identifiers (00, 01, 02, …). For each interaction NN, associated files follow a consistent naming scheme, for example:

NN.interactions – prose/log of the interaction

NN.sub-score – sub-score specification

NN.sco – generated Csound score(s)

NN.orc – orchestra used in that interaction

NN.render – rendered audio

NN.analyses – evaluation and analysis notes

Suffixes or additional indices (e.g., _01, _02) may be used to distinguish multiple scores or renders within a single interaction, while maintaining NN as the primary interaction ID.

Probability of Repeating a Score from a Given Sub-score

It was noted that a given sub-score (e.g., 00.sub-score) can generate a very large number of distinct scores. For each event there is a finite number of possible values for each randomized parameter (start time, duration, and other p-fields), determined by that parameter’s range and precision setting. Assuming the parameters are drawn independently, the number of distinct event configurations is the product of these cardinalities, and the number of possible scores is this product raised to the power of the number of events. Even with conservative assumptions, this yields an astronomically large number. Consequently, the probability of generating exactly the same score twice from a rich sub-score is effectively zero for practical purposes, though an exact figure would require the explicit 00.sub-score to compute.
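To give a sense of scale, the counting argument can be worked through with illustrative numbers. Only the p3 range ([3, 5] at two decimal places) is taken from this session; the p2 range and the event count of 100 are hypothetical, and the parameters are assumed to be independent and uniformly distributed. The actual figure for 00.sub-score would differ.

```python
from math import prod


def cardinality(lo, hi, prec):
    """Number of distinct values on [lo, hi] at prec decimal places."""
    return round((hi - lo) * 10 ** prec) + 1


# (low, high, precision) per randomized p-field.
params = {
    "p3": (3, 5, 2),                  # from the session: 201 possible durations
    "p2 (hypothetical)": (0, 60, 2),  # assumed one-minute onset window
}

per_event = prod(cardinality(*spec) for spec in params.values())
n_events = 100                        # hypothetical event count
n_scores = per_event ** n_events      # exact, using Python's big integers

# Under these assumptions, the chance that two independently generated
# scores are identical is 1 / n_scores.
```

With only these two parameters, `n_scores` is already on the order of 10^608, so adding the remaining p-fields only makes an exact repeat less likely.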

Planning for 01.interactions

The session concluded with a plan to proceed to 01.interactions. Dr. Rhoades will provide: (1) four new base samples, properly named (Soundin.xxx), for analysis and correlation with the next steps, and (2) a 01.sub-score to serve as the structural basis for generating 01.sco. The generation of 01.sco will incorporate all clarified rules and preferences from interaction_00, including correct instrument syntax, onset behavior, density modulation, and mask interpretation. Evaluations of 01.render(s) will then feed back into the ongoing Bayesian-style refinement of the system.