Sonifying Quantum Interference Patterns Through Hybrid Simulation and Hardware Execution

by Michael Rhoades, PhD (PI) and Anthropic’s Claude, AI

Introduction

This research approaches musical composition as the generation of complex audio waveforms through computational processes, fundamentally departing from traditional notation-based methodologies. While acknowledging the significant artistic achievements of conventional compositional practice, this work pursues an alternative paradigm wherein generative algorithms, data sonification, machine learning, and quantum computing form the basis for sophisticated waveform synthesis. The compositional process is conceptualized as a collaborative interaction between artistic sensibilities and expanding computational capabilities, in which technological advancements open novel territories for sonic exploration.

This project investigates the sonification of quantum interference patterns generated through quantum computing (QC), utilizing both IBM quantum hardware and local Qiskit simulations. The research aims to create spatially distributed multichannel audio works wherein quantum mechanical phenomena, specifically quantum superposition, entanglement, and measurement collapse, directly inform both spectral content and spatial parameters. This approach positions quantum computing not merely as a tool for calculation, but as a generative system capable of producing acoustic material embodying fundamental quantum uncertainty and probability distributions.

Background and Motivation

Quantum computing can offer substantial, and for some problems exponential, computational advantages over classical computing in specific problem domains, including quantum simulation and, potentially, optimization. Its capacity to generate complex interference patterns is directly relevant to generative audio synthesis. Unlike classical bits, which exist in discrete states (0 or 1), quantum bits (qubits) can exist in superposition: linear combinations of basis states weighted by complex-valued probability amplitudes. When qubits interact through multi-qubit gates they can become entangled, and as gates are applied the probability amplitudes reinforce or cancel according to their phase relationships, producing interference patterns. Upon measurement, the superposition collapses to a single classical outcome, with probabilities given by the squared magnitudes of the quantum amplitudes.

This fundamental quantum behavior, the transition from coherent superposition to measured classical state, presents a unique opportunity for sonification. The pre-measurement quantum state contains rich interference patterns encoded in both amplitude and phase information across an exponentially large state space (2^n states for n qubits).

However, measurement destroys phase information: it collapses the quantum superposition and yields only classical probability distributions. This same collapse is what allows quantum computers to return usable computational results. For this sonification research, it necessitates a hybrid approach: complete statevector information (amplitude and phase) is extracted from simulation, while real quantum hardware characteristics (noise, decoherence, measurement statistics) are captured from physical execution. Together, the two sources provide access to both the coherent quantum interference patterns and the stochastic measurement outcomes.
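To illustrate why measurement statistics alone cannot recover phase, the following minimal sketch compares two single-qubit states whose amplitudes differ only in relative phase. It is written in plain Python rather than Qiskit, and the names `plus`, `minus`, `probabilities`, and `phases` are illustrative and not taken from the project code.

```python
import cmath
import math

# Two single-qubit states with equal magnitudes but opposite relative phase.
# Names are illustrative, not taken from the project code.
s = 1 / math.sqrt(2)
plus = [complex(s, 0), complex(s, 0)]    # (|0> + |1>) / sqrt(2)
minus = [complex(s, 0), complex(-s, 0)]  # (|0> - |1>) / sqrt(2)

def probabilities(state):
    """Born rule: the probability of each outcome is the squared magnitude."""
    return [abs(a) ** 2 for a in state]

def phases(state):
    """Phase angle arg(psi_i) of each amplitude, in radians."""
    return [cmath.phase(a) for a in state]

print(probabilities(plus), probabilities(minus))  # identical distributions
print(phases(plus), phases(minus))                # phases differ on |1>
```

Both states yield the same measurement distribution, so only the simulated statevector distinguishes them. This is the information the hybrid approach takes from simulation.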

Technical Framework

Quantum Computing Environment

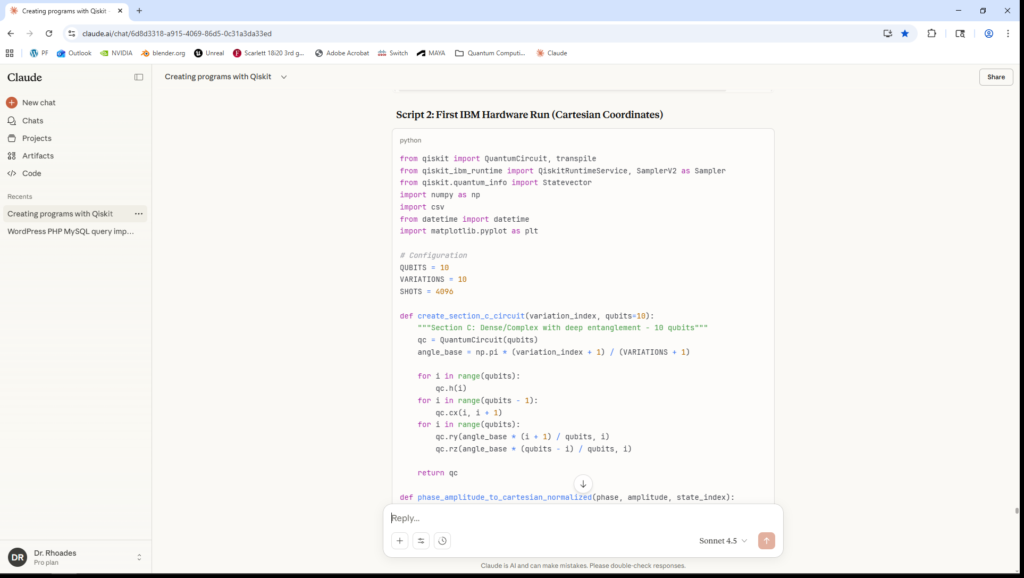

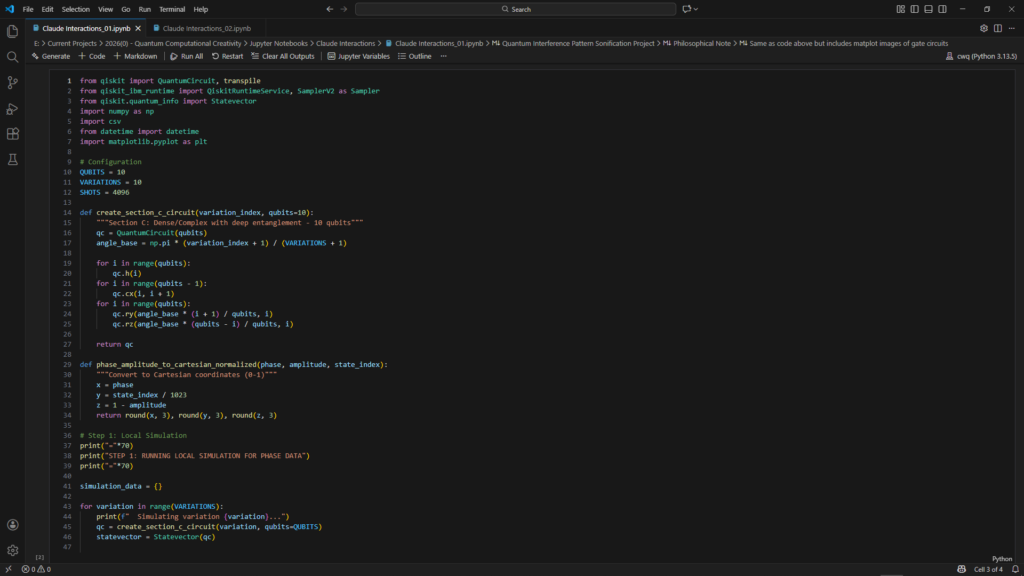

The research utilizes IBM’s Qiskit framework, an open-source software development kit for quantum computing, interfacing with IBM’s 156-qubit Heron-series quantum processors via cloud access. Development occurs within Jupyter notebooks, enabling iterative algorithm design, local simulation, and hardware execution within a unified environment.

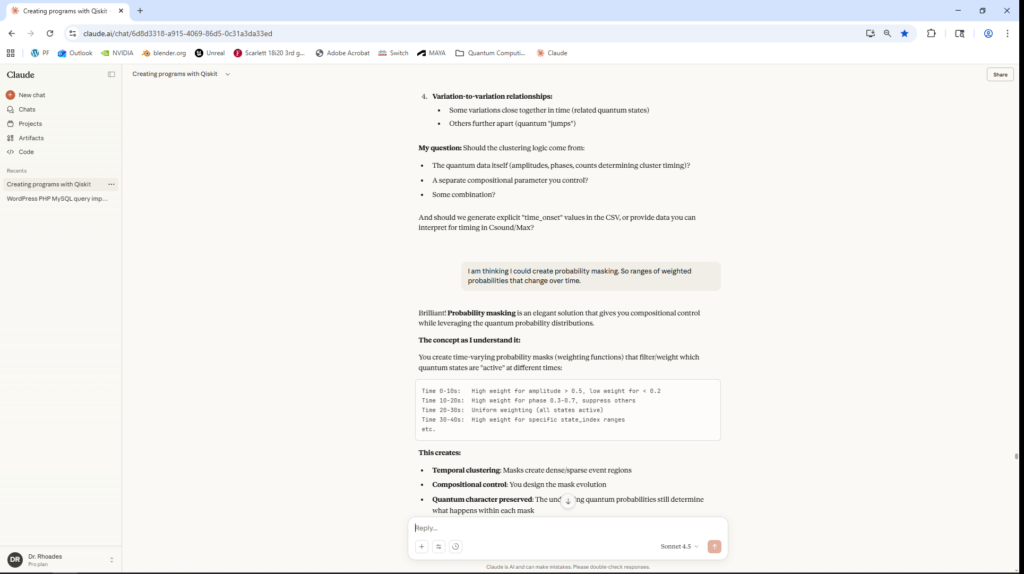

AI-Assisted Development

Initial project estimates anticipated months of study to achieve sufficient proficiency in quantum algorithm development. This timeline was significantly compressed through collaboration with Claude (Anthropic’s large language model), which served as a technical intermediary translating conceptual sonification goals into executable Qiskit code. This AI-assisted methodology enabled rapid iteration between conceptual design and implementation, though all algorithmic decisions, quantum circuit designs, and compositional choices remained under direct authorial control. The use of large language models in this capacity represents an emerging paradigm in computational creativity research, warranting acknowledgment as both a methodological tool and a subject for critical examination.

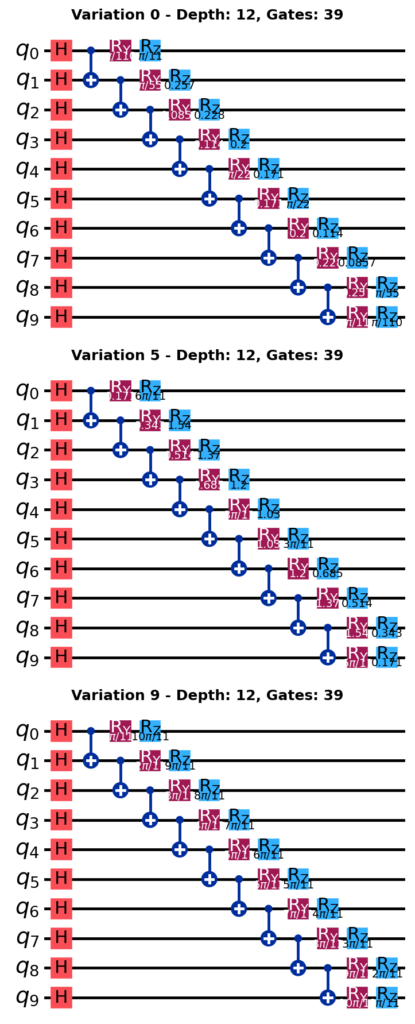

Quantum Circuit Design

The research employs parametric quantum circuits designed to generate varied interference patterns through systematic variation of gate parameters. The initial implementation focuses on 10-qubit circuits (generating 2^10 = 1,024 quantum states), utilizing circuit families characterized by:

1. Superposition generation: Hadamard gates applied to all qubits

2. Entanglement structure: CNOT gates creating controlled correlations between qubits

3. Parametric rotation: RY and RZ gates with systematically varied angles across circuit variations

This circuit architecture creates dense entanglement and complex interference patterns while remaining tractable for near-term quantum hardware with limited coherence times.
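The three gate families listed above can be sketched with a small pure-Python statevector simulator. This is an illustrative stand-in, not the project's Qiskit code. The layer order (Hadamard, RZ, CNOT chain, RY), the nearest-neighbour CNOT chain, and the angle values are all assumptions. The RZ layer is placed before the CNOTs because CNOTs applied directly to a uniform superposition only permute equal amplitudes and leave the state unchanged. The example uses 3 qubits for brevity; the same functions accept 10.

```python
import cmath
import math

def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (qubit 0 is the least significant bit)."""
    out = list(state)
    bit = 1 << q
    for i in range(len(state)):
        if not (i & bit):
            a, b = state[i], state[i | bit]
            out[i] = gate[0][0] * a + gate[0][1] * b
            out[i | bit] = gate[1][0] * a + gate[1][1] * b
    return out

def apply_cnot(state, control, target):
    """Flip the target bit of every basis state whose control bit is 1."""
    out = list(state)
    c, t = 1 << control, 1 << target
    for i in range(len(state)):
        if (i & c) and not (i & t):
            out[i], out[i | t] = state[i | t], state[i]
    return out

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]

def ry(theta):
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def rz(theta):
    return [[cmath.exp(-0.5j * theta), 0], [0, cmath.exp(0.5j * theta)]]

def build_state(n_qubits, rz_angles, ry_angles):
    """Assumed layer order: H on all qubits, RZ, CNOT chain, RY."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_1q(state, H, q)
    for q in range(n_qubits):
        state = apply_1q(state, rz(rz_angles[q]), q)
    for q in range(n_qubits - 1):
        state = apply_cnot(state, q, q + 1)
    for q in range(n_qubits):
        state = apply_1q(state, ry(ry_angles[q]), q)
    return state

# Hypothetical angles for one circuit variation.
state = build_state(3, rz_angles=[0.3, 1.1, 2.0], ry_angles=[0.7, 0.2, 1.5])
print(len(state), sum(abs(a) ** 2 for a in state))  # 2^3 amplitudes, norm close to 1
```

Varying the two angle lists across calls gives a family of circuit variations in the sense described above.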

Hybrid Simulation-Hardware Methodology

The core technical innovation involves combining complementary data from two sources:

Statevector Simulation (local execution):

• Provides complete quantum state information pre-measurement

• Extracts amplitude values: |ψ(i)| for each basis state i

• Extracts phase values: arg(ψ(i)) for each basis state i

• Represents ideal quantum behavior without hardware imperfections

• Execution time: <1 second per circuit variation

Hardware Execution (IBM quantum processors):

• Measurement collapses superposition to classical outcomes

• Provides probability distributions across measured states

• Captures real quantum hardware characteristics: decoherence, gate errors, readout errors

• 4,096 repeated measurements per circuit to statistically sample the probability distribution across 1,024 quantum states

• Execution time: ~12 seconds per batch of 10 circuit variations

The hybrid approach combines simulation-derived phases (capturing quantum interference structure) with hardware-derived amplitudes (capturing physical quantum behavior), yielding data that reflects both ideal quantum mechanics and real-world quantum device characteristics.
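As a rough illustration of the shot statistics involved, the sketch below draws 4,096 samples from an ideal probability distribution over 1,024 states. It is a noiseless stand-in for hardware execution: real devices add decoherence, gate errors, and readout errors that this sampling does not model. The function name `sample_counts` and the seeded random generator are assumptions made for reproducibility.

```python
import random
from collections import Counter

def sample_counts(probabilities, shots=4096, seed=0):
    """Draw `shots` measurement outcomes from an ideal probability distribution.

    Noiseless stand-in for hardware execution (hypothetical helper).
    """
    rng = random.Random(seed)
    outcomes = rng.choices(range(len(probabilities)), weights=probabilities, k=shots)
    return Counter(outcomes)

n_states = 1024
uniform = [1 / n_states] * n_states
counts = sample_counts(uniform)

print(sum(counts.values()))    # total number of shots
print(4096 / n_states)         # mean shots per state for a uniform distribution
print(n_states - len(counts))  # states never observed in this run
```

With an average of only four shots per state in the uniform case, individual hardware probabilities are coarse estimates, which is one reason the simulated statevector is a useful complement.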

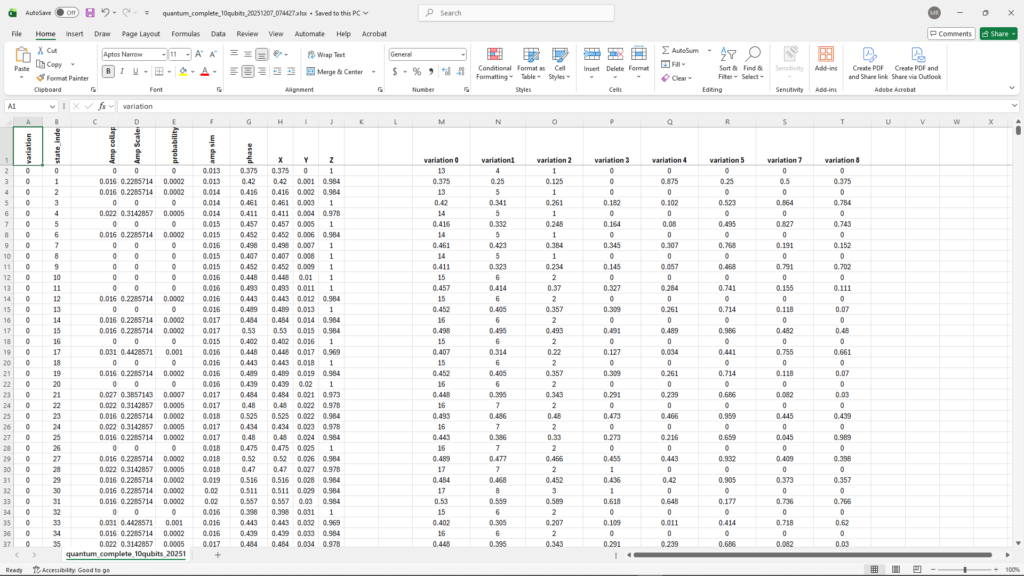

Data Extraction and Processing

For each circuit variation, the following data is extracted and combined:

• State index (0-1023): Identifies which quantum basis state

• Amplitude (simulation): |ψ(i)|, representing ideal quantum amplitude

• Amplitude (hardware): √(p(i)), derived from measurement probability p(i)

• Phase: arg(ψ(i)) normalized to [0,1], from simulation statevector

• Measurement count: Raw number of times each state was measured (out of 4,096 shots)

• Probability: Normalized measurement frequency from hardware

This data structure preserves both the coherent quantum interference patterns (via phase) and the stochastic measurement outcomes (via hardware probabilities).
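A minimal sketch of how the fields listed above could be assembled per basis state is shown below. The record keys, the helper names, and the phase convention (wrapping arg(ψ(i)) into [0, 2π) and dividing by 2π) are assumptions; the source specifies only that phase is normalized to [0,1]. The statevector and counts are made-up two-qubit values for illustration, not measured data.

```python
import cmath
import math

def phase_to_unit(amplitude):
    """Map arg(psi_i) from (-pi, pi] onto [0, 1] (assumed convention)."""
    return (cmath.phase(amplitude) % (2 * math.pi)) / (2 * math.pi)

def build_records(statevector, counts, shots):
    """Combine simulated amplitudes and phases with hardware counts."""
    records = []
    for i, amp in enumerate(statevector):
        n = counts.get(i, 0)
        p = n / shots
        records.append({
            "state": i,                   # basis-state index
            "amp_sim": abs(amp),          # ideal amplitude |psi_i|
            "amp_hw": math.sqrt(p),       # hardware amplitude sqrt(p_i)
            "phase": phase_to_unit(amp),  # simulated phase in [0, 1]
            "count": n,                   # raw measurement count
            "prob": p,                    # normalized measurement frequency
        })
    return records

# Illustrative two-qubit example; the counts are invented, not measured.
s = 1 / math.sqrt(2)
statevector = [complex(s, 0), 0j, 0j, complex(0, s)]
counts = {0: 2100, 1: 46, 3: 1950}
records = build_records(statevector, counts, shots=4096)
for r in records:
    print(r)
```

States that never appear in the counts receive a hardware amplitude of zero while retaining their simulated phase.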

Three-Dimensional Spatial Mapping

Quantum states are mapped to three-dimensional Cartesian coordinates for multichannel spatial audio diffusion. The mapping translates abstract quantum state properties to acoustic spatial properties as follows:

• X-axis (left-right, [0,1]): Quantum phase angle

• Y-axis (lower-upper, [0,1]): State index, providing vertical distribution

• Z-axis (front-rear, [0,1]): Inverted amplitude (1 – amplitude). High amplitude places a state near the listener (front) with minimal reverberation; low amplitude places it farther from the listener (rear) with increased reverberation.

This mapping is calibrated for an eight-speaker cubic array positioned at the vertices of a normalized unit cube, with the listener at the center. The coordinate system directly correlates quantum phase relationships in Hilbert space to acoustic spatial relationships in physical space, while the amplitude-to-depth mapping integrates with convolution reverberation algorithms to create coherent distance cues.
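The coordinate mapping above can be sketched as follows. The division of the state index by N – 1 and the trilinear weighting across the eight cube vertices are assumptions; the source does not specify the index scaling or the panning law, and a production system might use a power-preserving law instead.

```python
from itertools import product

def state_to_xyz(index, n_states, phase_unit, amplitude):
    """Map one quantum state to a point in the unit cube.

    x: phase in [0, 1]; y: state index scaled to [0, 1] (assumed scaling);
    z: 1 - amplitude, so strong states sit at the front.
    """
    x = phase_unit
    y = index / (n_states - 1)
    z = 1.0 - amplitude
    return x, y, z

def cube_gains(x, y, z):
    """Trilinear weights for speakers at the 8 cube vertices (assumed panning law)."""
    gains = {}
    for vx, vy, vz in product((0, 1), repeat=3):
        wx = x if vx else 1 - x
        wy = y if vy else 1 - y
        wz = z if vz else 1 - z
        gains[(vx, vy, vz)] = wx * wy * wz
    return gains

xyz = state_to_xyz(index=1023, n_states=1024, phase_unit=0.25, amplitude=1.0)
print(xyz)
print(cube_gains(*xyz))
```

The trilinear weights always sum to 1, so a state located exactly at a vertex is routed to that speaker alone.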

Implementation and Results

Initial Output Characteristics

The first-generation audio output exhibits several distinctive characteristics:

1. Spectral density: High information density reflecting 1,024 quantum states per circuit variation

2. Stochastic texture: Granular, non-periodic structure reflecting quantum measurement statistics

3. Sparse amplitude distribution: Most quantum states exhibit near-zero amplitude due to destructive interference, creating pronounced dynamic contrast

4. Phase-derived spatial motion: Continuous spatial trajectories derived from phase evolution across quantum states

The audio material does not conform to conventional musical organization (tonal harmony, rhythmic periodicity, melodic contour). Rather, it exhibits characteristics aligned with quantum stochasticity—probability distributions, interference-driven amplitude modulation, and measurement-induced discretization. This suggests that while the sonification successfully captures quantum mechanical behavior, additional compositional structuring will be necessary to bridge quantum-generated material with aesthetic intelligibility and musical discourse.

Validation of Quantum Signatures

The output successfully demonstrates several quantum mechanical signatures:

• Interference patterns: Amplitude distributions showing constructive and destructive interference

• Measurement collapse: Discrete state occupancy reflecting wavefunction collapse

• Hardware noise: Perceptible differences between simulation-based and hardware-based amplitude distributions, indicating successful capture of decoherence and gate errors

• Entanglement effects: Correlated amplitude structures across quantum state indices

These characteristics indicate that the sonification methodology preserves quantum mechanical information in the acoustic domain.
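One way to put a number on the difference between simulated and hardware distributions is the total variation distance. This metric is offered here as an illustrative option and is not stated in the source as the method used; the `measured` values below are invented for demonstration.

```python
def total_variation_distance(p, q):
    """Half the L1 distance between two distributions over the same states."""
    if len(p) != len(q):
        raise ValueError("distributions must cover the same states")
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

ideal = [0.5, 0.0, 0.0, 0.5]           # e.g. |psi_i|^2 from simulation
measured = [0.47, 0.03, 0.02, 0.48]    # made-up hardware frequencies
print(total_variation_distance(ideal, measured))
```

Because finite sampling alone produces a nonzero distance, a fair comparison would set the hardware value against a noiseless sample drawn with the same number of shots.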

Discussion and Future Directions

Compositional Challenges

The primary compositional challenge lies in constraining quantum stochasticity while preserving quantum signatures. This represents a balance between chaos (inherent quantum randomness and exponential state-space complexity) and order (algorithmic structure and compositional intent). Future work will explore:

1. Algorithm families: Designing quantum circuits with specific interference characteristics (sparse vs. dense patterns, periodic structures, controlled symmetries)

2. Temporal organization: Mapping quantum state evolution to musical time through interpolation strategies and temporal grain structures

3. Parametric control: Systematic variation of circuit parameters to create compositional sections with distinct sonic identities

4. Multi-scale structure: Hierarchical organization where individual quantum circuits generate local texture while circuit sequences create larger formal structures

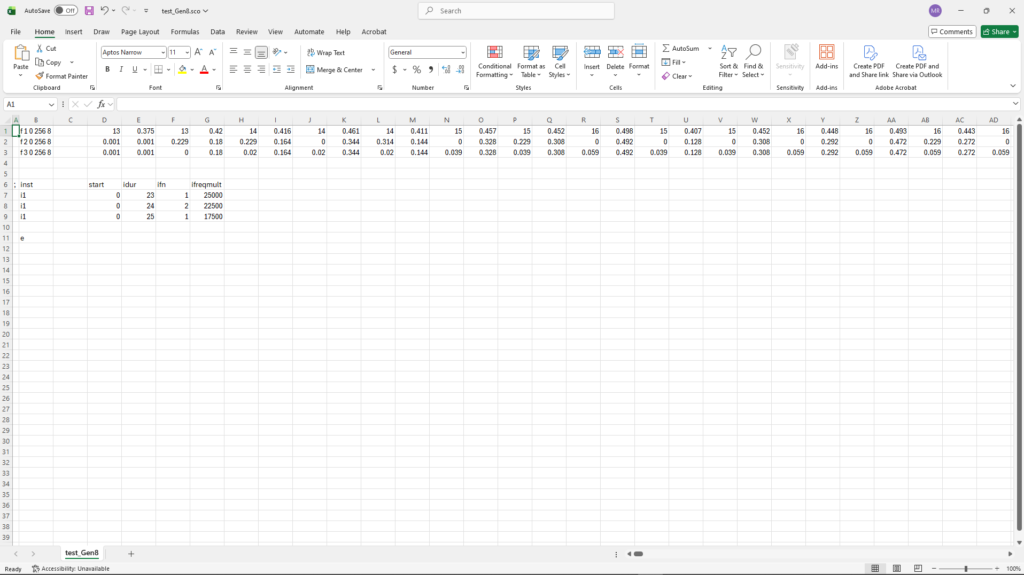

Expansion to Multichannel Spatial Composition

The immediate next phase involves expanding from initial proof-of-concept to full-scale multichannel works:

• Eight primary waveforms (expandable to 32 channels)

• Target duration: 8+ minutes per composition

• Integration with Csound for waveform synthesis via GEN08 cubic spline interpolation

• Real-time spatial processing utilizing the three-dimensional coordinate mappings
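As a sketch of the Csound integration, the helper below formats a list of values as a GEN08 function-table statement for a score file. Using amplitude values as the spline ordinates, the equal segment lengths, the table number, and the table size are all assumptions; the source states only that GEN08 cubic spline interpolation is used.

```python
def gen08_statement(values, table_number=1, size=1024):
    """Format values as a Csound GEN08 f-statement with equal-length segments.

    Layout assumed: f <num> <time> <size> 8 <value> <length> <value> ...
    """
    if len(values) < 2:
        raise ValueError("GEN08 needs at least two ordinate values")
    segment = size / (len(values) - 1)  # segment lengths sum to the table size
    fields = [f"{values[0]:.6f}"]
    for v in values[1:]:
        fields.append(f"{segment:g}")
        fields.append(f"{v:.6f}")
    return f"f {table_number} 0 {size} 8 " + " ".join(fields)

# Hypothetical amplitude values for five breakpoints.
print(gen08_statement([0.0, 0.5, 1.0, 0.25, 0.0]))
```

Csound rescales tables created with a positive GEN number; writing the GEN number as -8 skips that normalization if the raw values should be preserved.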

Quantum Computing Resource Considerations

Hardware execution on IBM quantum processors requires careful resource management. Initial benchmarking indicates:

• 10-qubit circuits, 10 variations: 12 – 31 seconds quantum execution time

• Current IBM pricing: $1.60/second, $96/minute, $5,760/hour

• Estimated full-project requirement: 30 – 60 minutes quantum time

• Total projected cost: $2,880 – $5,760

• This figure does not include the numerous sub-renders required for testing and proof of concept
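The cost figures above follow directly from the per-second rate, as this short calculation shows. The function name is illustrative, and the rate is the one quoted in the list, which may change over time.

```python
RATE_USD_PER_SECOND = 1.60  # rate quoted above; subject to change

def quantum_cost(seconds):
    """Cost in US dollars for a given amount of quantum execution time."""
    return round(seconds * RATE_USD_PER_SECOND, 2)

scenarios = [
    ("10-variation batch (12 s)", 12),
    ("10-variation batch (31 s)", 31),
    ("30 minutes", 30 * 60),
    ("60 minutes", 60 * 60),
]
for label, seconds in scenarios:
    print(f"{label}: ${quantum_cost(seconds):,.2f}")
```

At this rate a single batch of ten circuit variations costs roughly $19 to $50, which is why iteration is kept on the local simulator.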

The research strategy prioritizes extensive local simulation for algorithm development and aesthetic evaluation, reserving hardware execution for final production where quantum noise characteristics provide unique acoustic signatures unavailable through simulation.

Theoretical Implications

This work operates at the intersection of quantum mechanics, sonification methodology, and electroacoustic composition. By sonifying quantum interference patterns, the research creates acoustic material that embodies fundamental quantum uncertainty and measurement-induced state collapse. The hybrid methodology—combining ideal quantum interference (simulation) with physical quantum behavior (hardware)—mirrors the relationship between theoretical and empirical physics, translating this epistemological duality into aesthetic experience.

The spatial mapping extends this conceptual framework by translating quantum phase relationships in abstract Hilbert space to acoustic relationships in physical space, creating a direct perceptual analogy between quantum mechanical structure and spatial acoustic structure.

Conclusion

This research demonstrates the feasibility of sonifying quantum interference patterns through a hybrid simulation-hardware methodology, successfully capturing both ideal quantum mechanical behavior and real-world quantum device characteristics. Initial results confirm that quantum signatures are preserved in the acoustic domain, though substantial compositional development remains necessary to create aesthetically coherent musical works.

The project represents an exploratory investigation into quantum computing as a generative system for electroacoustic composition, opening questions about the relationship between quantum mechanical structure and musical structure, the role of AI assistance in technical creative practice, and the aesthetic potential of quantum-derived sonic material. As QC technology continues to develop, this research establishes methodological foundations for a new domain of computational creativity at the quantum-classical interface.